Ollama is not only usable from the command line and through its API; it also integrates with third-party user interfaces (UIs), so you can interact with large language models (LLMs) in a more user-friendly way. This guide gives an overview of the ways to interact with Ollama and then walks step by step through connecting it to graphical frontends such as Msty.

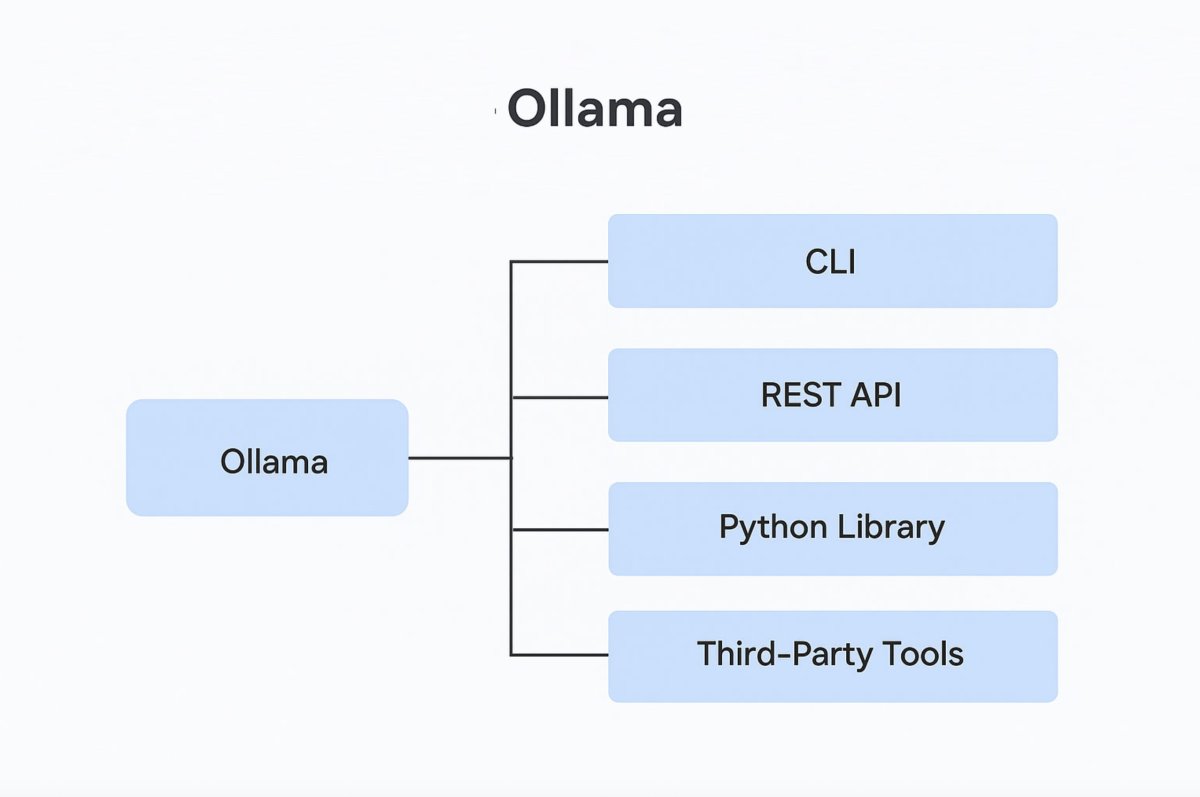

Overview: Ways to Interact with Ollama Models

Ollama runs a variety of large language models locally, which you can use to build AI applications. There are several ways to interact with Ollama and its models:

- Command-Line Interface (CLI): The primary and most straightforward way to use Ollama. It allows you to manage models, generate text, and perform other tasks directly from your terminal.

- REST API: Ollama exposes a local REST API (by default at http://127.0.0.1:11434), letting you build custom applications, scripts, or integrations programmatically.

- Python Library: Ollama offers a Python library for even more customizable and programmable interactions, perfect for application development and automation.

- Graphical User Interfaces (GUI)/Third-Party Tools: You can use GUI-based tools that connect to your local Ollama instance, providing a chat-like experience similar to web-based AI assistants, but running entirely on your own machine.
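The following minimal sketch makes the REST API option concrete. It sends a single prompt to Ollama's `/api/generate` endpoint using only Python's standard library. It assumes that Ollama is running at the default address above and that a model named `llama3.2` has already been pulled. The helper names (`build_generate_request`, `generate`) are illustrative and not part of Ollama.

```python
import json
import urllib.request

# Default local address of the Ollama server.
OLLAMA_URL = "http://127.0.0.1:11434"


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a POST request for Ollama's /api/generate endpoint.

    "stream": False asks the server for one JSON object
    instead of a stream of partial responses.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"{OLLAMA_URL}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, timeout: float = 120.0) -> str:
    """Send the prompt to a running Ollama server and return the generated text."""
    request = build_generate_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # The generated text is in the "response" field of the reply.
    return body["response"]
```

When the server is running, `print(generate("llama3.2", "Why is the sky blue?"))` should print the model's answer. Turning off streaming gives up incremental output but returns one JSON response that is simpler to handle. If you would rather not deal with HTTP yourself, the official `ollama` Python package wraps these same endpoints.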

Integrating Ollama with Msty

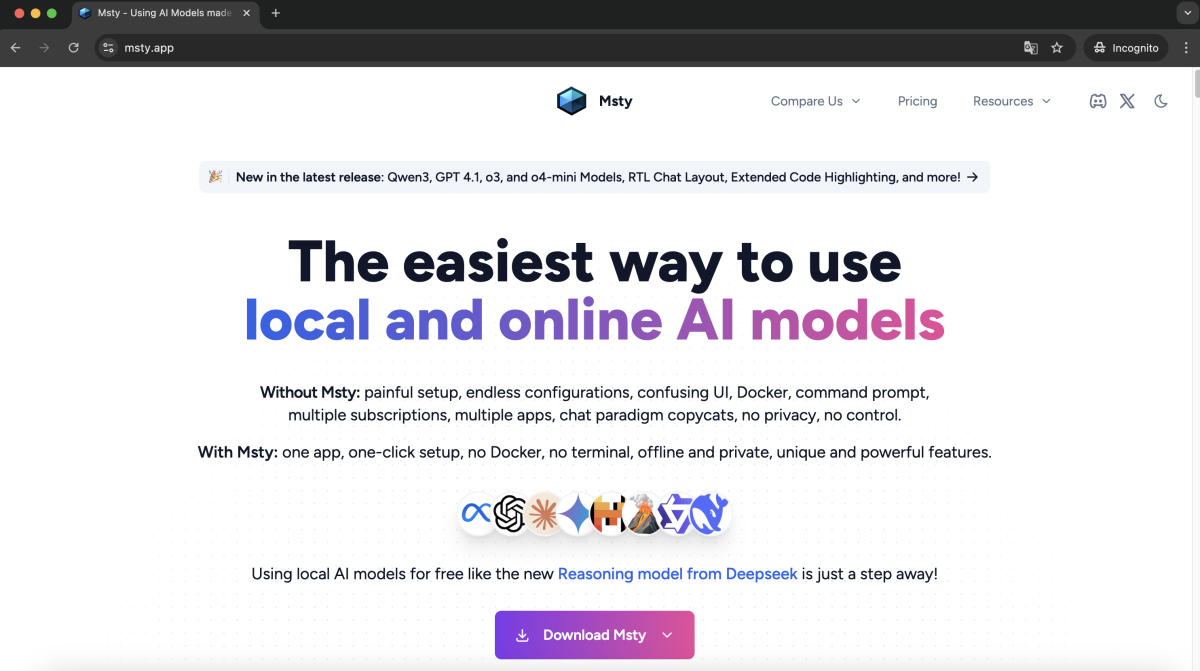

One popular way to interact with local Ollama models is by using user-friendly desktop applications that serve as frontends for your models. Here, we'll illustrate the process using Msty, but you can apply similar steps to other compatible tools.

Step 1: Why Use a Third-Party UI like Msty?

- No need for complex setup, Docker, or terminal commands.

- Cross-platform: available for Windows, macOS, and Linux.

- Seamless local and private interactions with your own models.

- Easy integration of additional features like document and image upload.

Step 2: Downloading and Installing Msty

- Visit the official Msty website and download the app for your OS (Windows, macOS Apple Silicon/Intel, or Linux).

- Install the app by following your system's standard application installation process.

- Open Msty after installation.

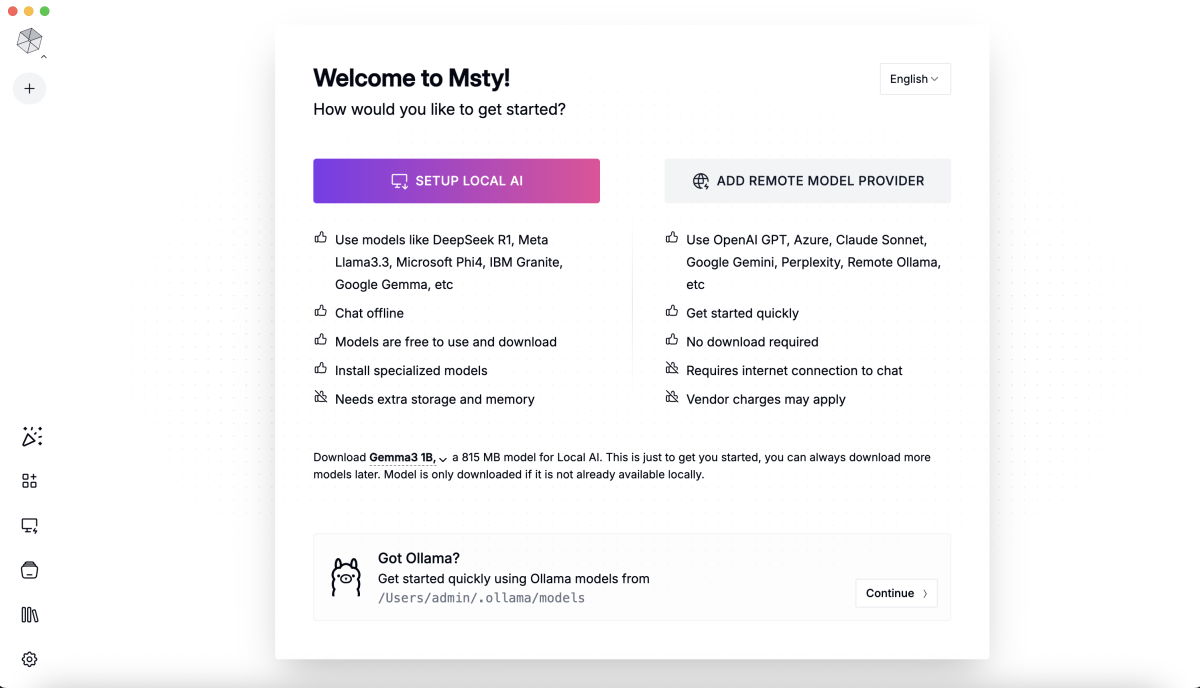

Step 3: Connecting Msty to Your Local Ollama Models

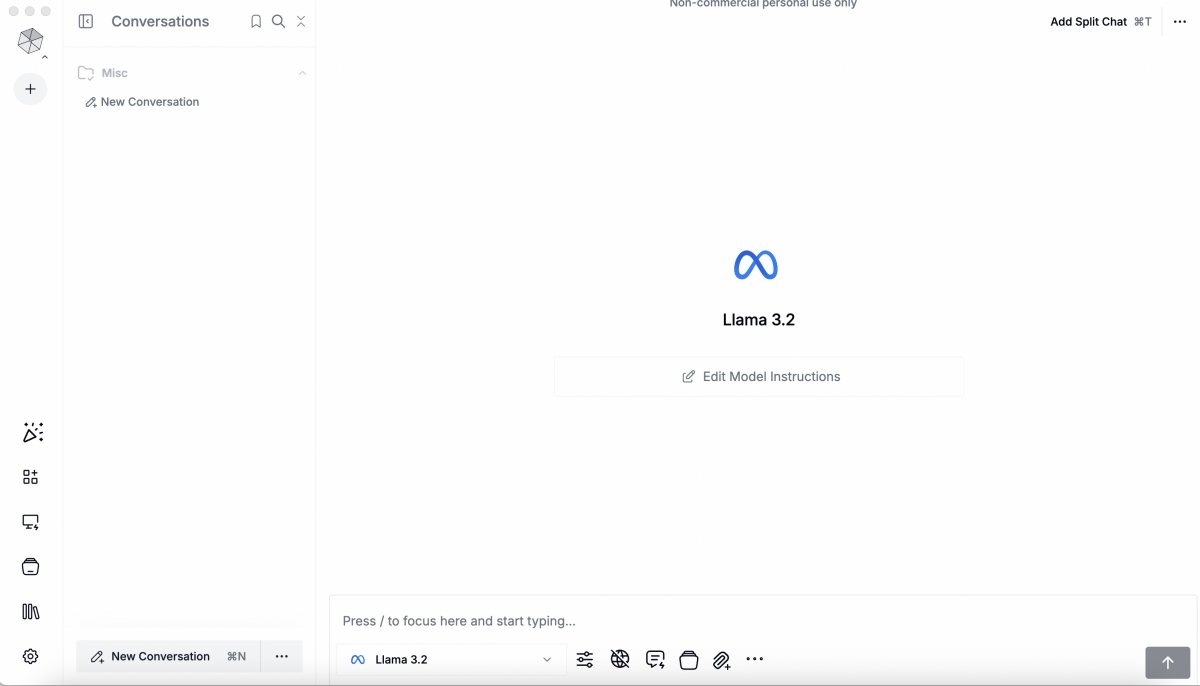

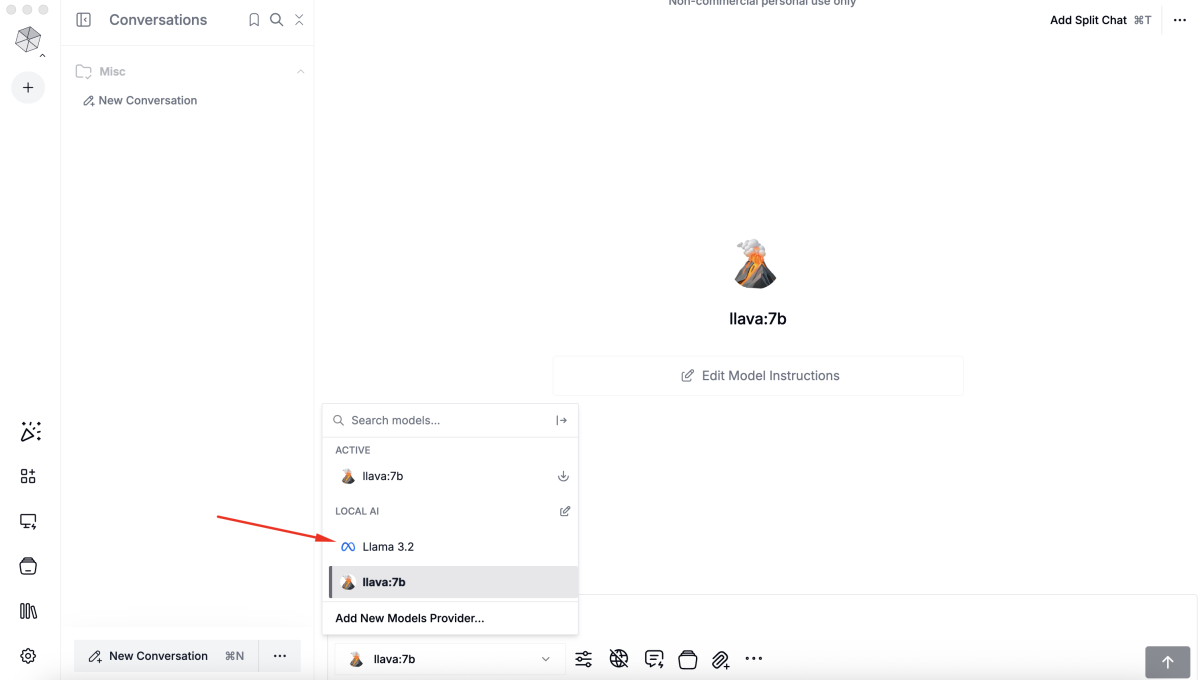

- When you launch Msty, it automatically detects your locally installed Ollama models (e.g., Llama 3.2, LLaVA 7B), provided that Ollama is running and the models have been downloaded.

- Click "Continue" to proceed and finish connecting to your local Ollama models.

- Once completed, you can select a model and begin chatting with it in the Msty interface.

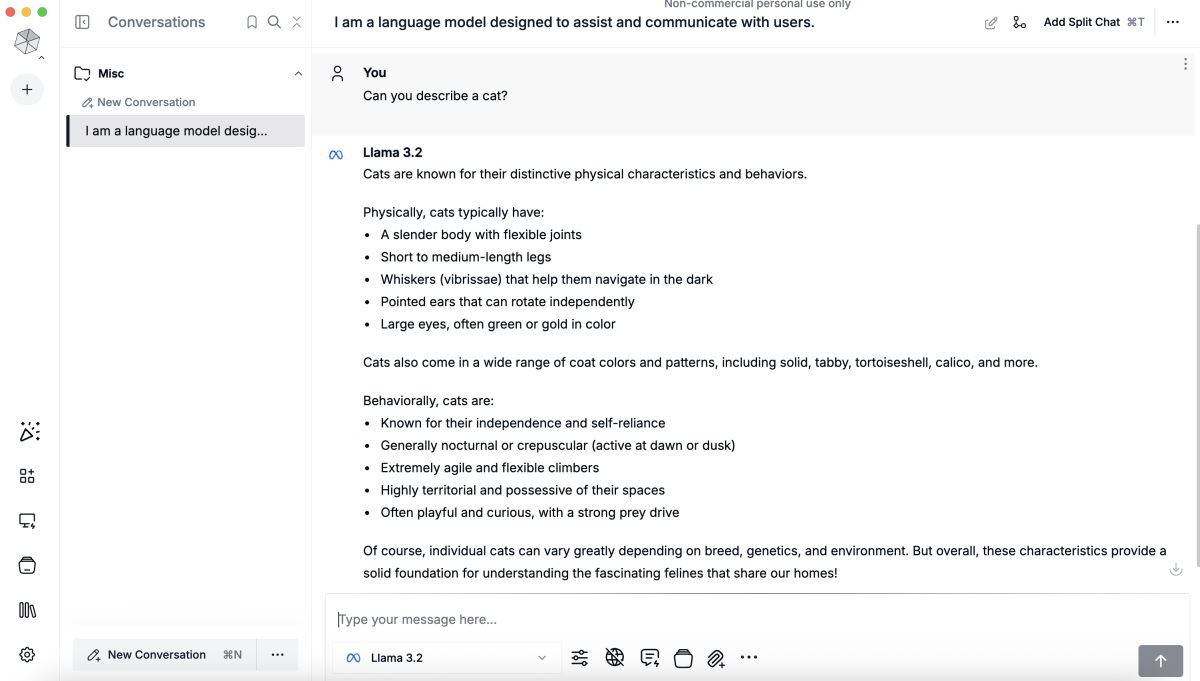
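This guide does not say how Msty discovers models. Any frontend can get the same list by querying the local Ollama server. The sketch below assumes Ollama's `GET /api/tags` endpoint, which returns the locally installed models. `parse_model_names` and `list_local_models` are hypothetical helper names. Running `ollama list` in a terminal shows the same information.

```python
import json
import urllib.request
from typing import Optional

# Default local address of the Ollama server.
OLLAMA_URL = "http://127.0.0.1:11434"


def parse_model_names(tags: dict) -> list[str]:
    """Extract model names (e.g. "llama3.2:latest") from an /api/tags response body."""
    return [model["name"] for model in tags.get("models", [])]


def list_local_models(timeout: float = 5.0) -> Optional[list[str]]:
    """Return the installed model names, or None if no Ollama server answers."""
    try:
        with urllib.request.urlopen(f"{OLLAMA_URL}/api/tags", timeout=timeout) as resp:
            return parse_model_names(json.loads(resp.read().decode("utf-8")))
    except OSError:
        # URLError, refused connections, and timeouts are all OSError subclasses.
        return None
```

A `None` result means that no server answered at the default address. In that case, start Ollama (for example with `ollama serve`) before relaunching the frontend.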

Step 4: Chatting with Models in the UI

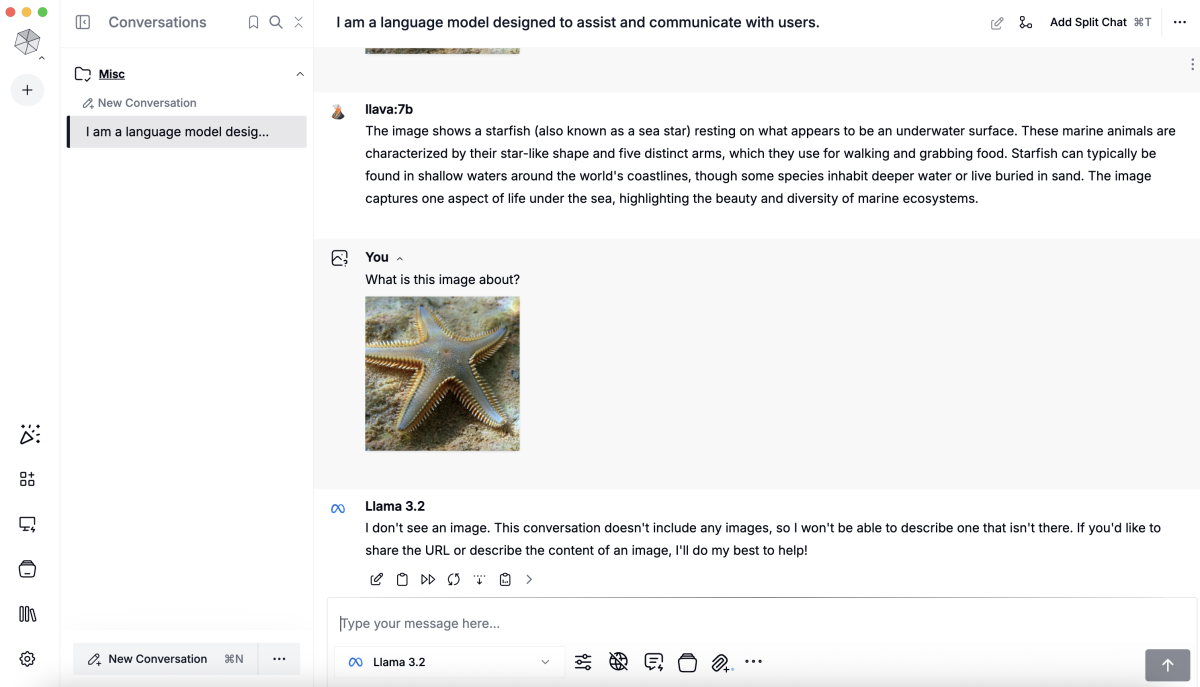

- Select a model from the list (e.g., Llama 3.2).

- Start chatting as you would in a typical AI chat app.

- Responses are generated locally, so your prompts stay private and there are no external API calls or usage fees.

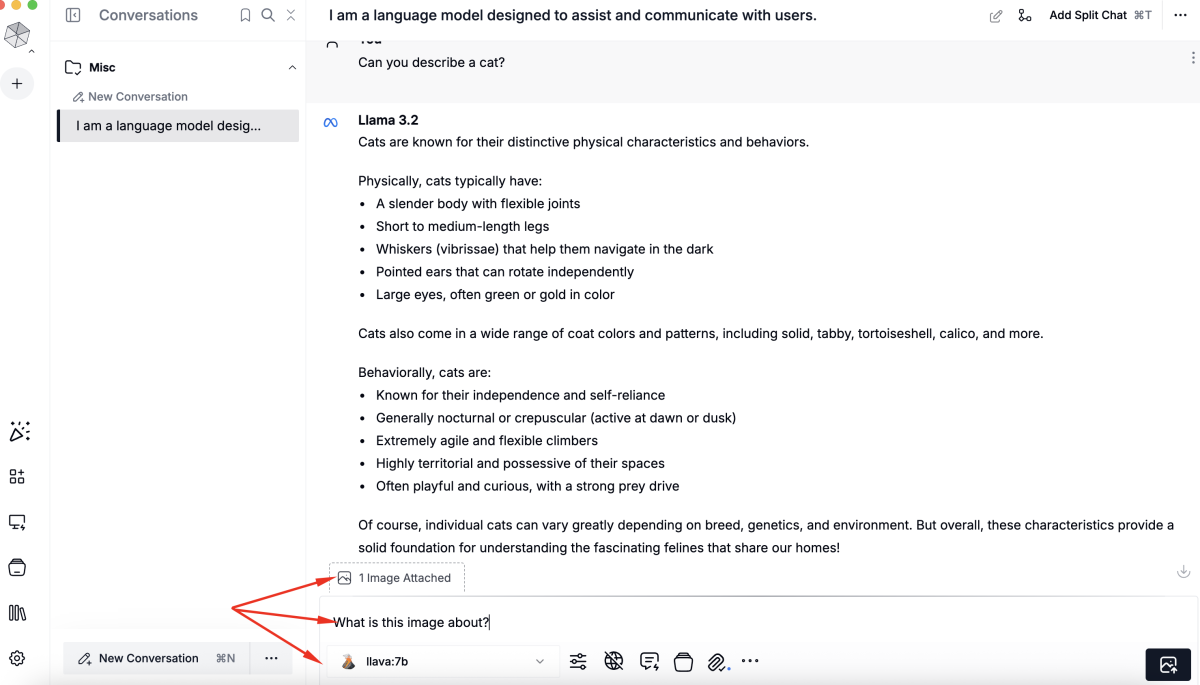

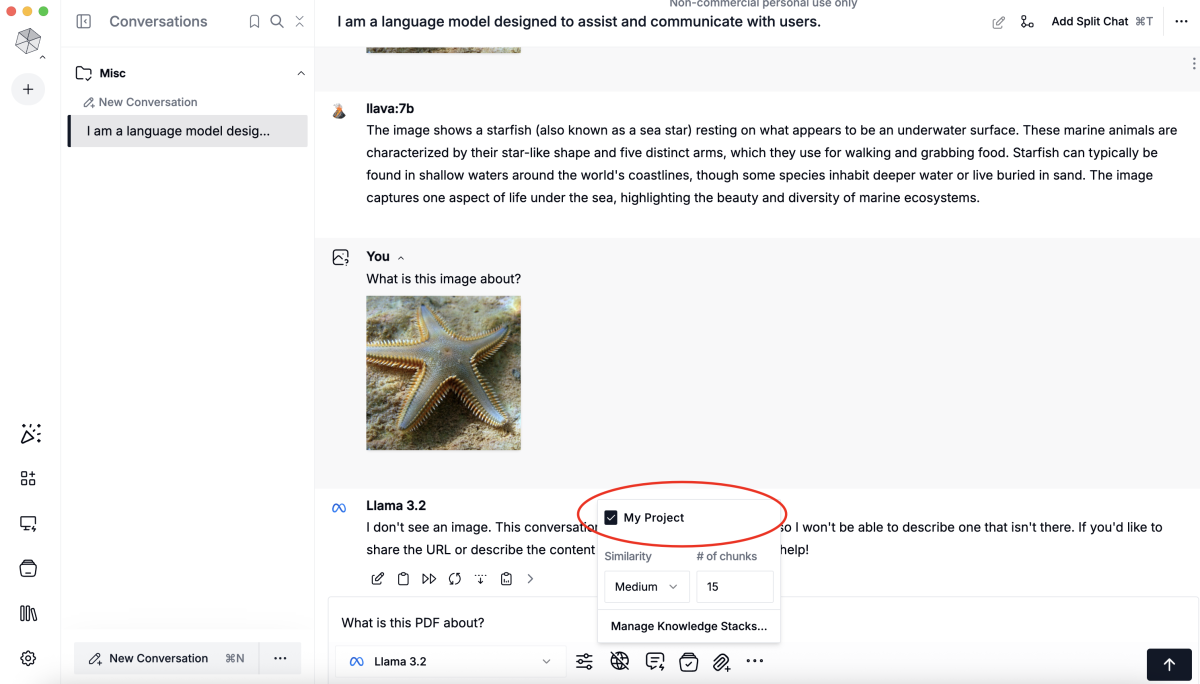
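Ollama's `/api/chat` endpoint takes the full list of messages on each request. A chat frontend built on it therefore typically keeps the conversation on the client side and resends it with every new message. The sketch below illustrates that pattern. It assumes the `/api/chat` endpoint and a pulled `llama3.2` model, and it does not describe Msty's actual implementation. `chat_payload` and `chat_turn` are illustrative names.

```python
import json
import urllib.request

# Default local address of the Ollama server.
OLLAMA_URL = "http://127.0.0.1:11434"


def chat_payload(model: str, history: list[dict], user_text: str) -> dict:
    """Return an /api/chat request body: prior turns plus the new user message.

    The caller's history list is not modified.
    """
    messages = history + [{"role": "user", "content": user_text}]
    return {"model": model, "messages": messages, "stream": False}


def chat_turn(model: str, history: list[dict], user_text: str,
              timeout: float = 120.0) -> list[dict]:
    """Send one turn to a running Ollama server and return the updated history."""
    payload = chat_payload(model, history, user_text)
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        # The reply's "message" field looks like {"role": "assistant", "content": "..."}.
        reply = json.loads(resp.read().decode("utf-8"))["message"]
    return payload["messages"] + [reply]
```

Start with `history = []`. Each call such as `history = chat_turn("llama3.2", history, "Hello!")` returns the history extended with your message and the assistant's reply, ready for the next turn.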

Step 5: Using Multimodal Models and Image Input in Msty

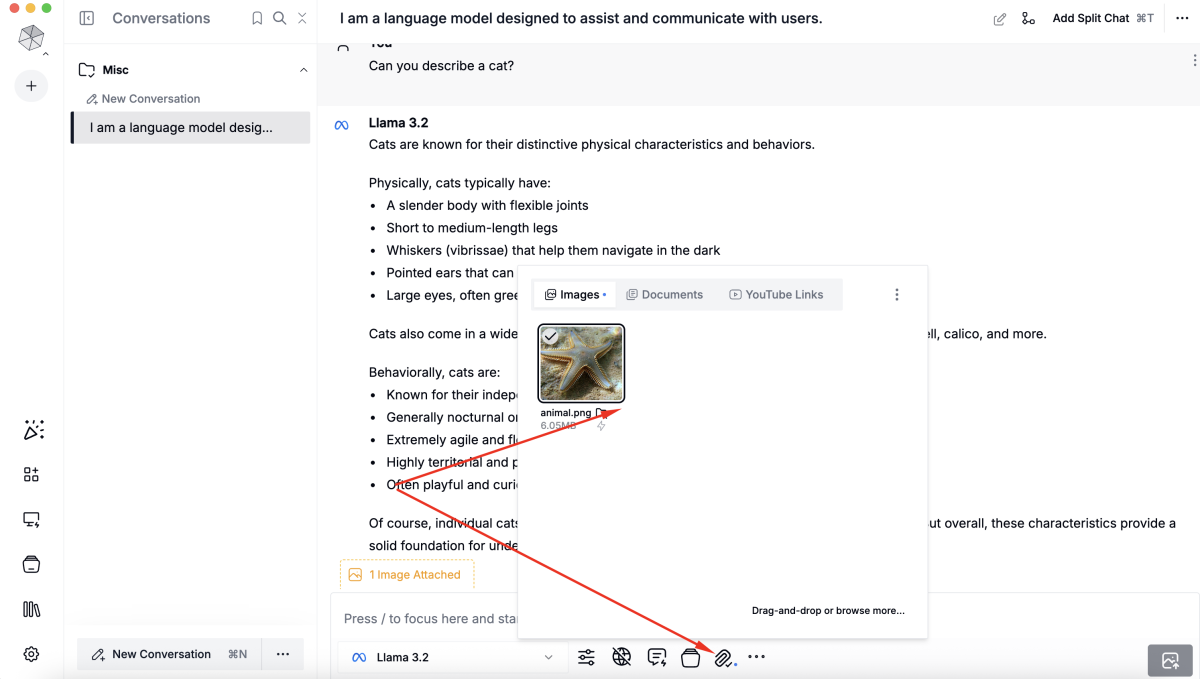

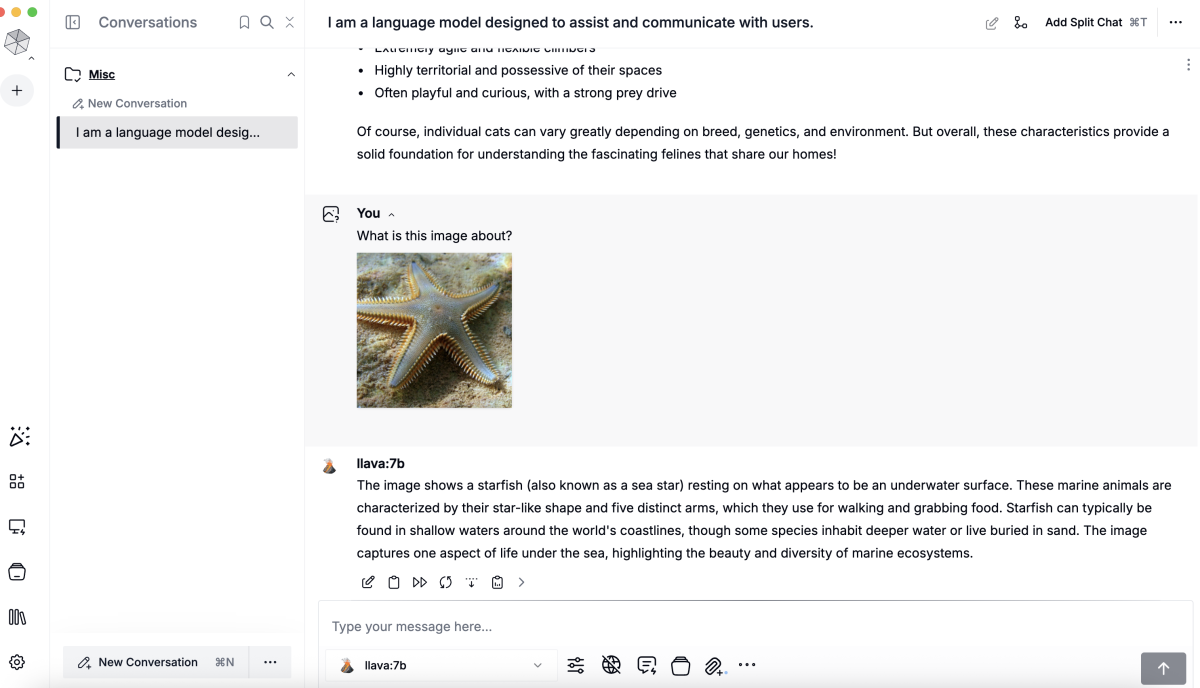

Msty supports multimodal models such as LLaVA 7B, which can process both images and text. To use this feature:

- Click on the "Attach images and document" icon in the Msty interface.

- Select your image, or drag and drop it into the chat window.

- Switch the active model to LLaVA (or another multimodal model).

- Ask a question such as "What is this image about?" and the model will analyze and describe the photo.

- Note: If you select a text-only model, image-based queries will not work.
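When you attach an image, the client has to send the image data to the model along with your question. In Ollama's chat API, images travel as base64-encoded strings in a message's `images` list. The sketch below assumes that format and a multimodal model pulled with `ollama pull llava`. The helper names are hypothetical, and Msty may package attachments differently.

```python
import base64
from pathlib import Path


def image_message(question: str, image_bytes: bytes) -> dict:
    """Build a user chat message that carries one base64-encoded image."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {"role": "user", "content": question, "images": [encoded]}


def vision_payload(model: str, question: str, image_path: str) -> dict:
    """Build an /api/chat request body asking a multimodal model about an image file."""
    image_bytes = Path(image_path).read_bytes()
    return {
        "model": model,  # e.g. "llava"; must be a multimodal model
        "messages": [image_message(question, image_bytes)],
        "stream": False,
    }
```

For example, `vision_payload("llava", "What is this image about?", "photo.jpg")` (the file name is hypothetical) produces a body that can be POSTed to `/api/chat` like a text-only chat request. Only the extra `images` list differs.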

Step 6: Benefits of Local UI Integration

- Everything runs locally on your machine, so your data never leaves your computer.

- No usage fees, privacy concerns, or reliance on cloud services.

- Easily switch between different models and knowledge bases as needed.

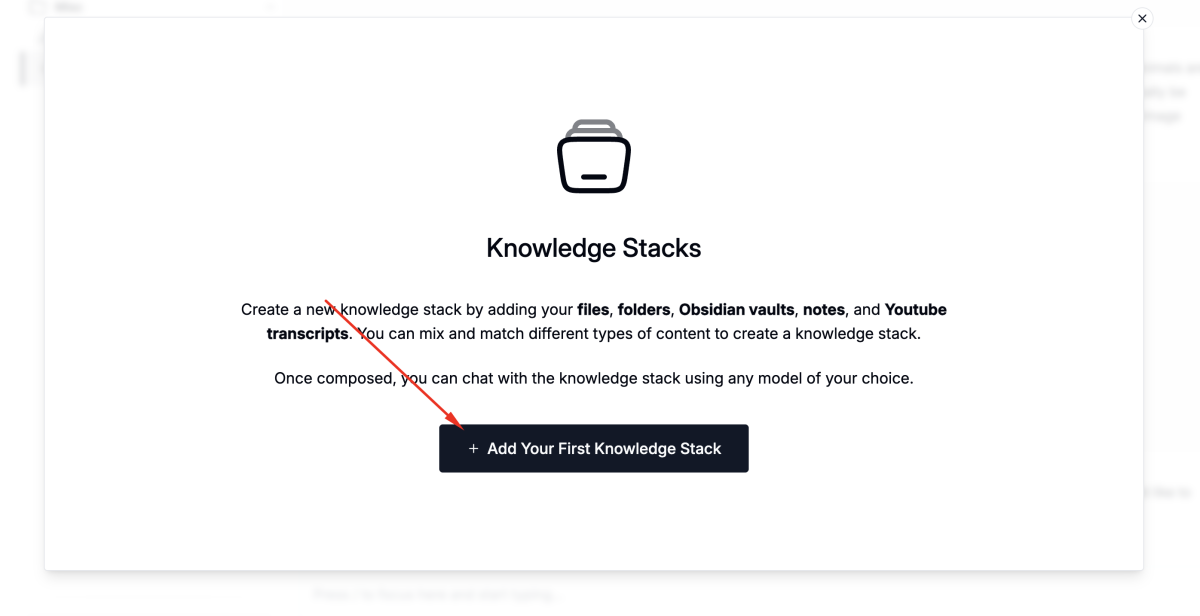

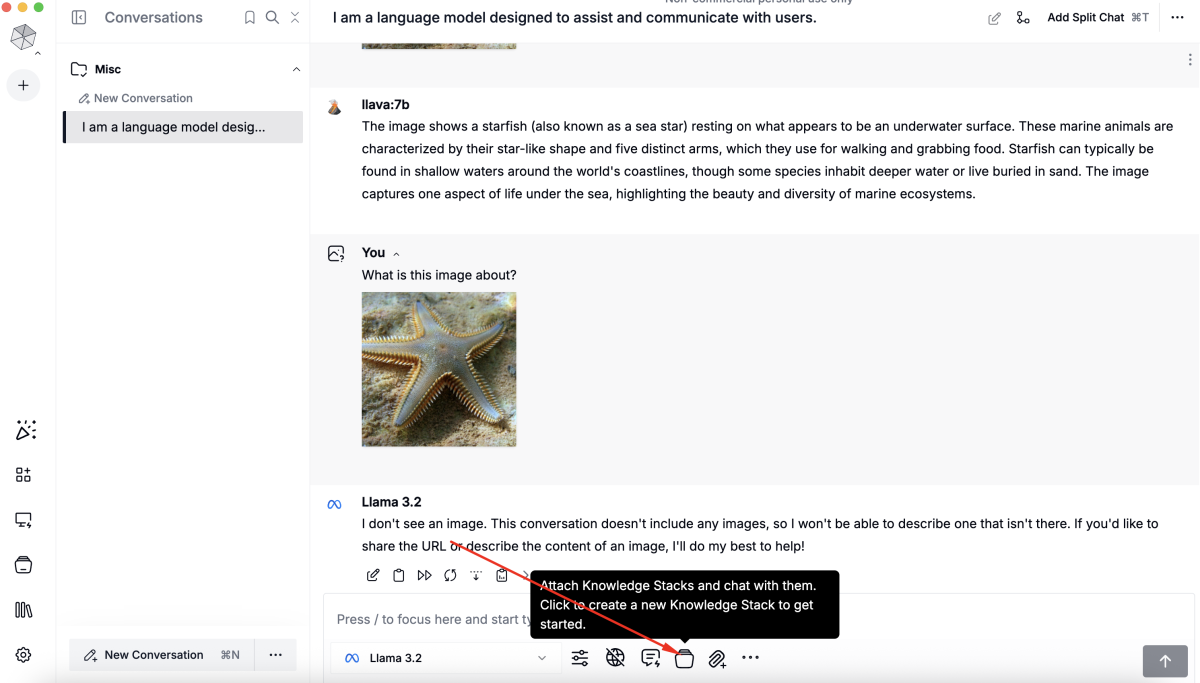

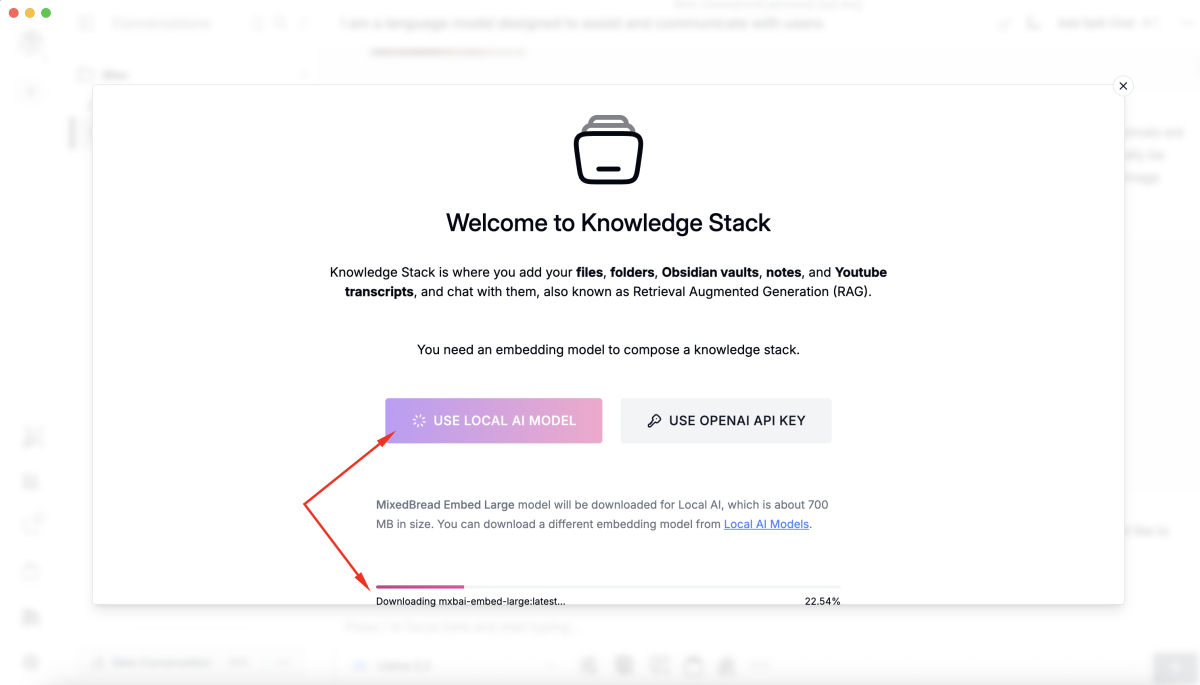

Step 7: Using Knowledge Stack for Document-based Q&A

Msty allows you to build a Knowledge Stack to query and summarize content from your own PDF documents. Follow these steps:

- Click on the Attach Knowledge Stack button in the interface.

- The first time you use this feature, Msty will prompt "You need an embedding model to compose a knowledge stack."

- Select USE LOCAL AI MODEL to download and use the embedding model locally.

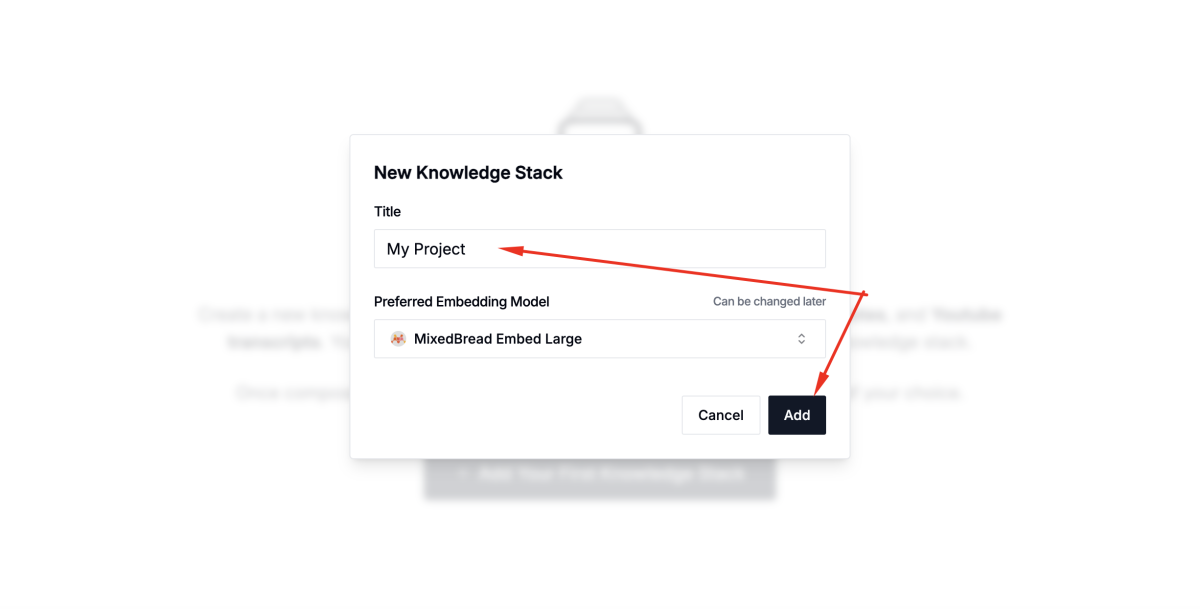

- Click Add Your First Knowledge Stack.

- Enter a title for your Knowledge Stack and click Add.

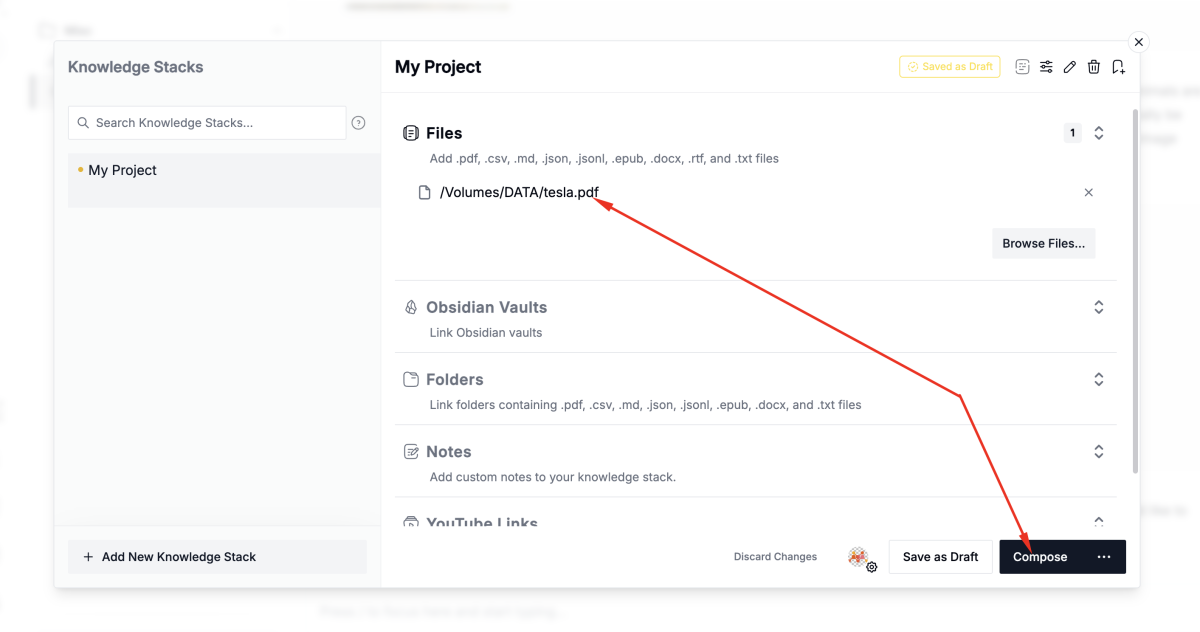

- Drag and drop your PDF file into the Knowledge Stack area, then click Compose and wait for the processing to complete.

- To ask questions about your PDF, click Attach Knowledge Stack again and select the Knowledge Stack you just created.

- Now you can enter questions such as "What is this PDF about?" or "Please summarize this PDF."

- The model will use the document content to answer your queries.
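This guide does not describe how Knowledge Stacks work internally. The steps above (an embedding model, a compose step, then questions answered from the document) do match the common retrieval-augmented generation pattern. The sketch below shows that pattern against Ollama directly, under several assumptions:

- The PDF text has already been extracted to a string.
- The embedding model is `nomic-embed-text`, pulled with `ollama pull nomic-embed-text`. Msty may download a different model.
- Embeddings come from Ollama's `/api/embed` endpoint.

All function names are hypothetical.

```python
import json
import math
import urllib.request

# Default local address of the Ollama server.
OLLAMA_URL = "http://127.0.0.1:11434"


def chunk_text(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into overlapping character windows that cover the whole string."""
    if overlap >= size:
        raise ValueError("overlap must be smaller than size")
    if not text:
        return []
    step = size - overlap
    return [text[i:i + size] for i in range(0, max(len(text) - overlap, 1), step)]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunk_vecs: list[list[float]],
          chunks: list[str], k: int = 3) -> list[str]:
    """Return the k chunks whose embeddings are most similar to the query."""
    ranked = sorted(zip(chunks, chunk_vecs),
                    key=lambda pair: cosine(query_vec, pair[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:k]]


def embed(texts: list[str], model: str = "nomic-embed-text") -> list[list[float]]:
    """Embed texts with a running Ollama server (model name is an assumption)."""
    request = urllib.request.Request(
        f"{OLLAMA_URL}/api/embed",
        data=json.dumps({"model": model, "input": texts}).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=120) as resp:
        return json.loads(resp.read().decode("utf-8"))["embeddings"]


def build_prompt(question: str, context_chunks: list[str]) -> str:
    """Combine the retrieved chunks and the question into one prompt."""
    context = "\n---\n".join(context_chunks)
    return f"Answer using only the context below.\n\nContext:\n{context}\n\nQuestion: {question}"
```

In this sketch, composing a stack corresponds roughly to `chunks = chunk_text(pdf_text)` followed by `vectors = embed(chunks)`. Answering a question then takes four calls:

1. Embed the question with `embed([question])[0]`.
2. Pick the closest chunks with `top_k`.
3. Build the prompt with `build_prompt(question, best_chunks)`.
4. Send that prompt to a chat model.

Because only the most similar chunks are sent, the prompt stays small even for long PDFs.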

Conclusion

Integrating Ollama with third-party frontend tools like Msty brings the power of large language models to a user-friendly desktop environment. You can chat, analyze images, and query your own documents, all with the privacy and control of local processing.