With Ollama, you can easily browse, download, and test a variety of open-source language models right on your local machine. This guide walks you through the basic workflow for downloading and running a text-based model.

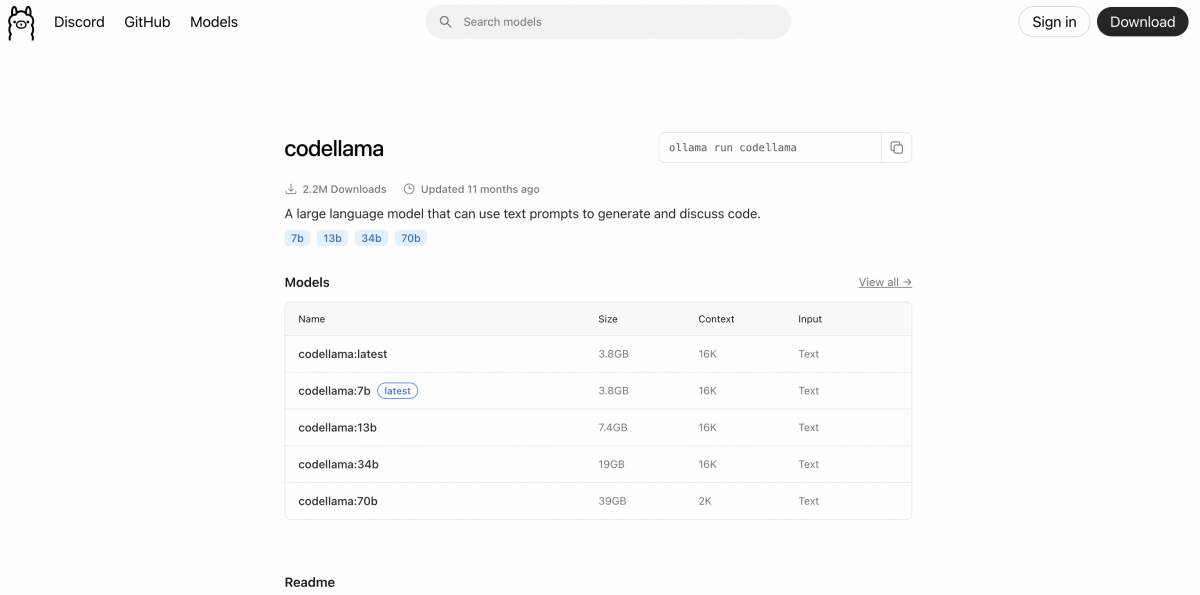

Step 1: Find a Model

Browse the Ollama model library and choose a model you want to try. Take note of the model name (for example, mistral or codellama).

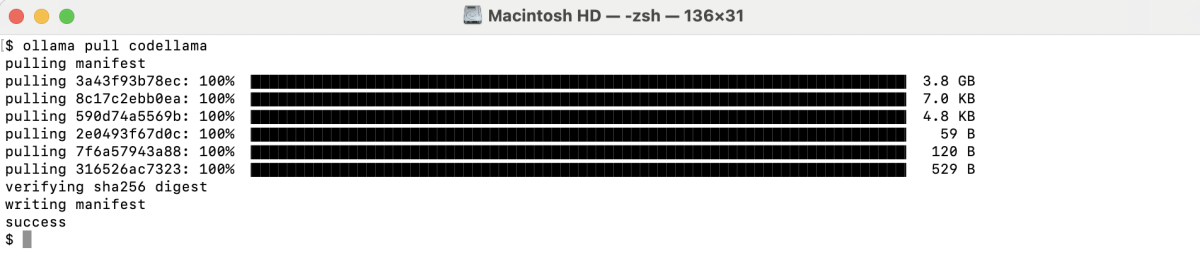

Step 2: Download the Model

To download a model, run the ollama pull command followed by the model name.
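As a minimal sketch, assuming you picked mistral in Step 1 (the MODEL variable and the fallback message are illustrative, not part of Ollama):

```shell
# Example model name from Step 1; substitute any name from the library.
MODEL="mistral"

# Download the model; print a hint to stderr if the command fails.
ollama pull "$MODEL" || echo "pull failed: check the model name and that Ollama is installed and running" >&2
```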

The download may take a few minutes, depending on the model size and your internet speed.

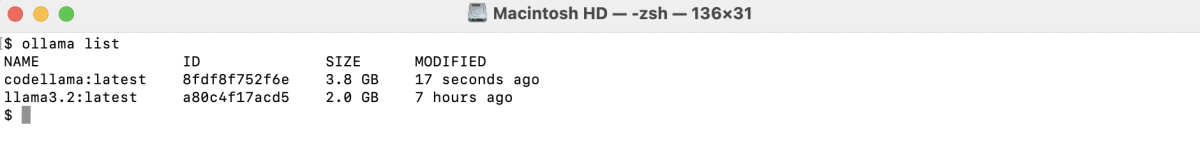

Step 3: Verify Downloaded Models

Once the download finishes, list all locally available models with the ollama list command.
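In a terminal this looks roughly as follows (the fallback message is an illustrative addition, not Ollama output):

```shell
# Show every model stored on this machine; print a hint to stderr if the command fails.
ollama list || echo "ollama list failed: is Ollama installed and running?" >&2
```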

You'll see each model's name, ID, size, and last modified time.

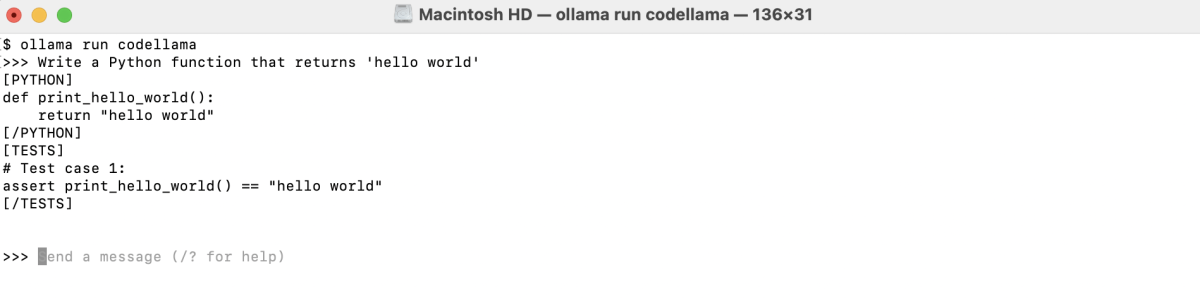

Step 4: Run and Test the Model

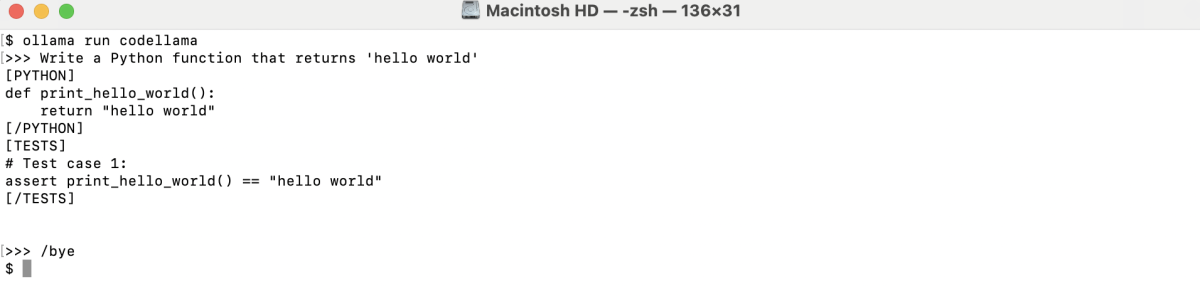

To start an interactive chat session, run the ollama run command followed by the model name.
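A minimal sketch, again assuming the mistral model from the earlier steps (MODEL and the fallback message are illustrative):

```shell
# Example model name; use whichever model you pulled earlier.
MODEL="mistral"

# Open an interactive chat session with the model in this terminal.
ollama run "$MODEL" || echo "run failed: check that the model name is correct and that Ollama is running" >&2
```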

You can now type questions (e.g., “Write a Python function that returns 'hello world'”) and the model will generate responses directly in your terminal.

Step 5: End the Session

To exit the chat session, type /bye or press Ctrl+D.

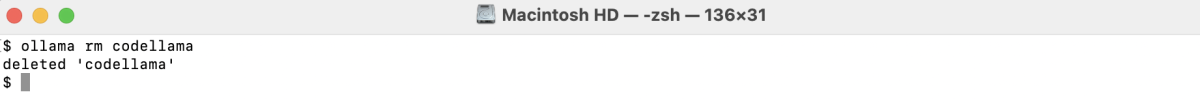

Step 6: Remove a Model

If you want to free up disk space, remove a model you no longer need with the ollama rm command followed by the model name.
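For example, assuming mistral is the model you want to delete (MODEL and the fallback message are illustrative; you can pull the model again later if you change your mind):

```shell
# Example model name; replace with the model you want to delete.
MODEL="mistral"

# Delete the local copy of the model to reclaim disk space.
ollama rm "$MODEL" || echo "remove failed: run 'ollama list' to check the exact model name" >&2
```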

That's it! You're ready to explore and test language models with Ollama on your own machine.