Ollama provides a REST API that lets you interact with models programmatically, making it easy to generate text or have multi-turn conversations directly from your applications or scripts. This guide will show you how to use the REST API endpoints for both text generation and chat, complete with example cURL commands.

Step 1 : Understanding the Ollama REST API

When you run Ollama, it serves a REST API locally at http://127.0.0.1:11434.

This API enables you to interact with models without needing the CLI, supporting both:

- Text generation (/api/generate)
- Chat (multi-turn conversations) (/api/chat)
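As a minimal sketch, the two endpoint URLs can be built in Python from the base address given above. The helper name `endpoint` is an assumption made for this guide; it is not part of Ollama.

```python
# Base address from the section above; adjust it if your server listens elsewhere.
OLLAMA_BASE_URL = "http://127.0.0.1:11434"


def endpoint(name: str) -> str:
    """Return the full URL for an API endpoint such as 'generate' or 'chat'.

    `endpoint` is a hypothetical helper for illustration, not an Ollama function.
    """
    return f"{OLLAMA_BASE_URL}/api/{name}"


# Print the two URLs discussed in this step.
print(endpoint("generate"))
print(endpoint("chat"))
```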

Step 2 : Generating Text with the /api/generate Endpoint

The /api/generate endpoint generates a one-off response from a model based on a prompt.

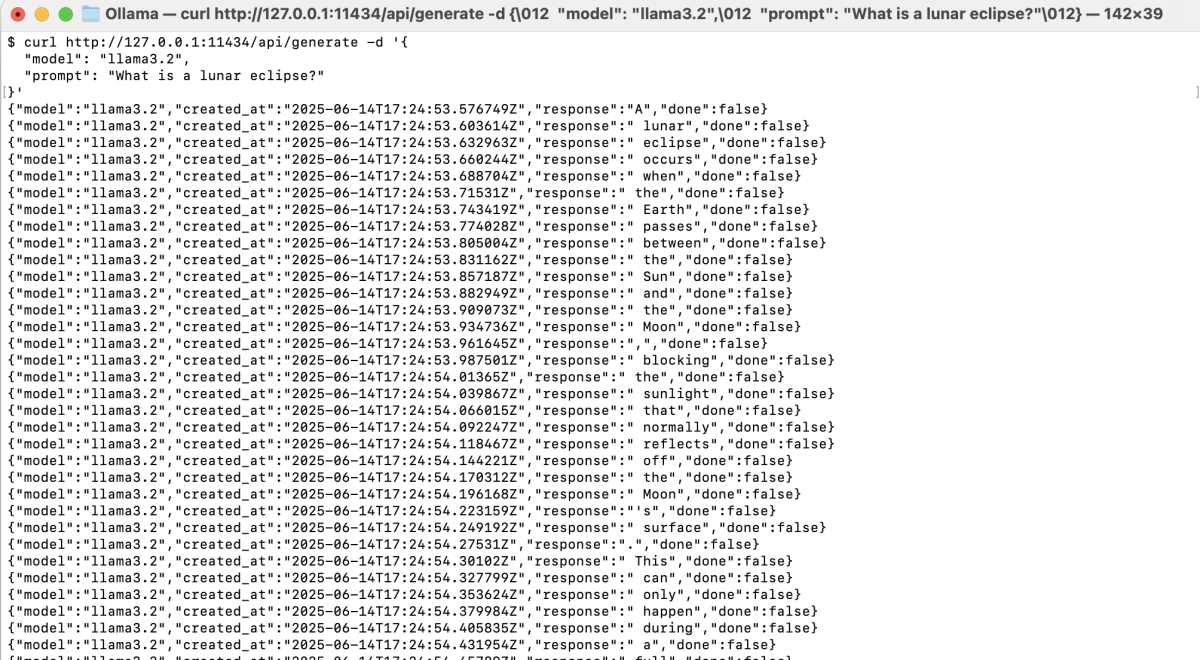

Basic cURL Example:

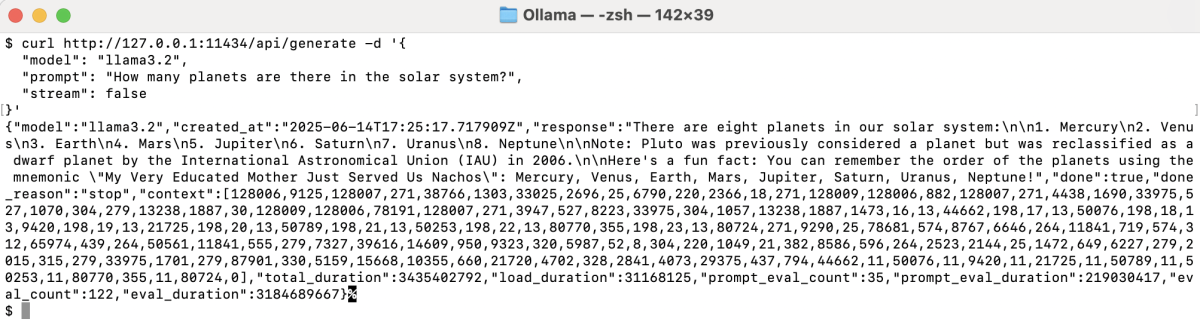

By default, the API will stream the response as a sequence of JSON objects. If you want the entire response at once (not streamed), add "stream": false to your payload:
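A non-streamed request might look like the following Python sketch, which uses only the standard library. The function names `build_generate_payload` and `generate` are hypothetical helpers, and the code assumes an Ollama server is running at the default address with the model already pulled.

```python
import json
import urllib.request

GENERATE_URL = "http://127.0.0.1:11434/api/generate"


def build_generate_payload(model: str, prompt: str, stream: bool = False) -> dict:
    """Assemble the JSON body for /api/generate (hypothetical helper)."""
    return {"model": model, "prompt": prompt, "stream": stream}


def generate(model: str, prompt: str) -> str:
    """Send a non-streamed request and return the generated text.

    Assumes a local Ollama server is reachable at GENERATE_URL.
    """
    payload = build_generate_payload(model, prompt, stream=False)
    request = urllib.request.Request(
        GENERATE_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    # With "stream": false the reply is one JSON object; the text is in "response".
    return body["response"]
```

With the server running, `generate("llama3.2", "Why is the sky blue?")` should return the model's answer as a single string.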

- model: Name of the model you want to use (e.g., llama3.2).
- prompt: The text prompt you want the model to answer.
- stream: Set to false for a full response in a single JSON object.
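When stream is left at its default, the reply arrives as one JSON object per line, each carrying a fragment of the text. The sketch below shows one way to join those fragments in Python. The function name `join_streamed_response` is hypothetical, and the sample chunks are hand-written stand-ins rather than real model output.

```python
import json
from typing import Iterable


def join_streamed_response(lines: Iterable[bytes]) -> str:
    """Concatenate the 'response' fragments of a streamed /api/generate reply."""
    parts = []
    for raw_line in lines:
        line = raw_line.strip()
        if not line:
            continue  # ignore blank lines between JSON objects
        chunk = json.loads(line)
        parts.append(chunk.get("response", ""))
        if chunk.get("done"):
            break  # the final object is marked with "done": true
    return "".join(parts)


# Hand-written sample chunks standing in for a real stream (illustrative data only).
sample_stream = [
    b'{"response": "Hello", "done": false}\n',
    b'{"response": ", world", "done": false}\n',
    b'{"response": "", "done": true}\n',
]
print(join_streamed_response(sample_stream))
```

The object returned by `urllib.request.urlopen` can be iterated line by line, so in a real script it could be passed to this function in place of the sample list.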

Step 3 : Requesting JSON Format Output

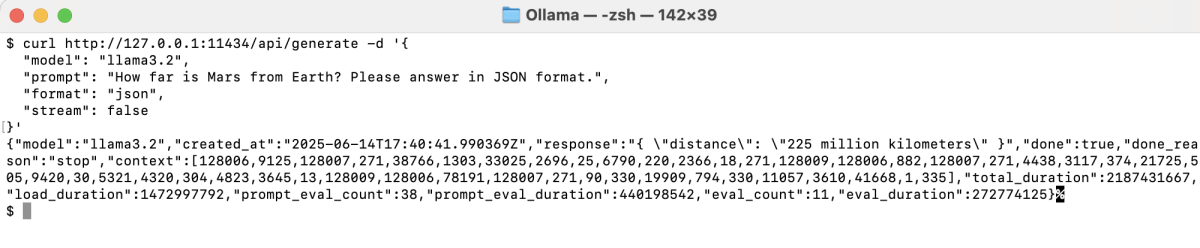

You can ask Ollama to return its response as JSON by doing two things: asking for JSON in your prompt and setting the format parameter in your payload.

Example:

- format: Set to "json" to request a structured JSON output from the model.
- Note: You should also instruct the model in your prompt to answer in JSON. This helps ensure the output is well-formed.

The JSON response will contain structured information as requested.
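A rough Python sketch of this step follows. It builds a payload with format set to "json" and decodes the model's answer, which arrives as a JSON string inside the reply's "response" field. The helper names and the sample reply are assumptions for illustration; the sample is not real model output.

```python
import json
from typing import Any


def build_json_payload(model: str, prompt: str) -> dict:
    """Assemble a non-streamed /api/generate body that requests JSON output."""
    return {"model": model, "prompt": prompt, "format": "json", "stream": False}


def parse_json_answer(api_reply: dict) -> Any:
    """Decode the model's answer, which is a JSON string in the 'response' field."""
    return json.loads(api_reply["response"])


# The prompt itself also asks for JSON, as recommended above.
payload = build_json_payload(
    "llama3.2", "What is the capital of France? Respond using JSON."
)

# Hand-written reply standing in for what the server might send back.
sample_reply = {"response": '{"capital": "Paris"}', "done": True}
print(parse_json_answer(sample_reply))
```

Because a model can still produce malformed output, wrapping `parse_json_answer` in a `try`/`except json.JSONDecodeError` block is a reasonable precaution.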

Step 4 : Chatting with the /api/chat Endpoint

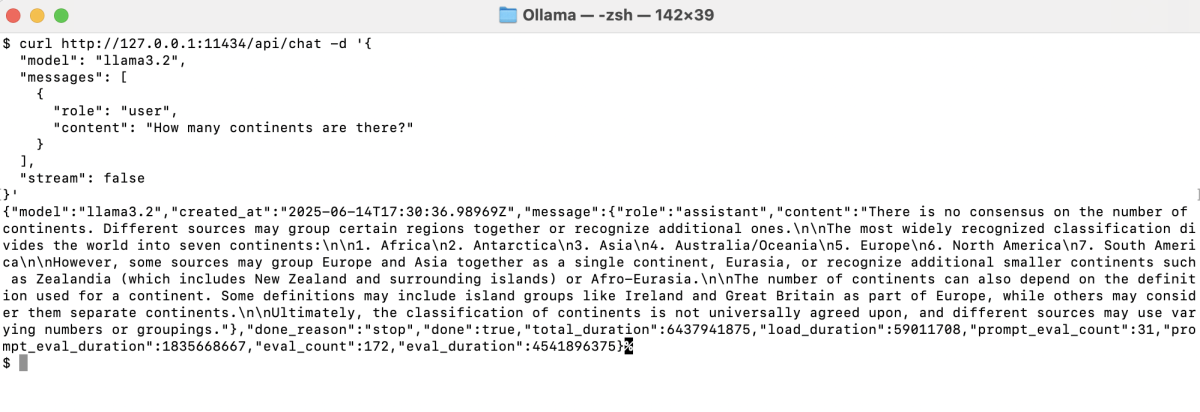

The /api/chat endpoint is designed for multi-turn conversations, allowing you to send and receive context-aware messages.

cURL Example for Chat:

- messages: An array of message objects, each with a role (user or assistant) and content.
- stream: Set to false to get the full response at once.
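A multi-turn exchange could be sketched in Python as follows. The helpers `add_turn`, `build_chat_payload`, and `chat` are hypothetical names, and the code assumes a local Ollama server at the default address whose non-streamed reply carries the assistant's message in a "message" field.

```python
import json
import urllib.request

CHAT_URL = "http://127.0.0.1:11434/api/chat"


def add_turn(messages: list, role: str, content: str) -> list:
    """Return a new history with one more message appended."""
    return messages + [{"role": role, "content": content}]


def build_chat_payload(model: str, messages: list) -> dict:
    """Assemble a non-streamed /api/chat body (hypothetical helper)."""
    return {"model": model, "messages": list(messages), "stream": False}


def chat(model: str, messages: list) -> dict:
    """Send the whole history and return the assistant's message object.

    Assumes a local Ollama server is reachable at CHAT_URL.
    """
    request = urllib.request.Request(
        CHAT_URL,
        data=json.dumps(build_chat_payload(model, messages)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["message"]  # {"role": "assistant", "content": "..."}
```

The API does not remember earlier turns, so context is kept by resending the history: append the returned assistant message and the next user message with `add_turn`, then call `chat` again with the full list.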

With the REST API, Ollama models are easy to integrate and automate, locally and securely.